Why is the Python code not implementing on GPU? Tensorflow-gpu, CUDA, CUDANN installed - Stack Overflow

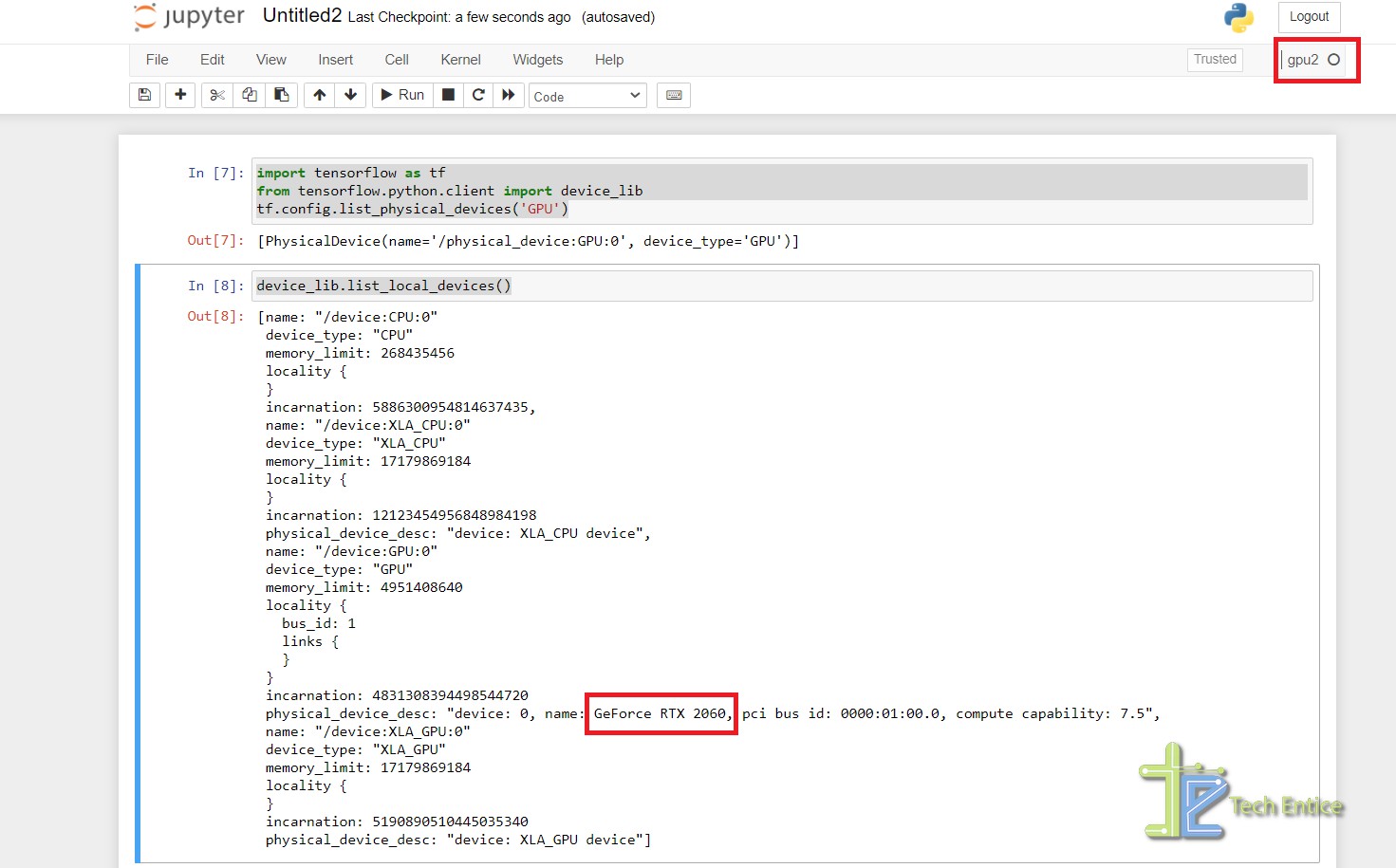

![[Azure DSVM] GPU not usable in pre-installed python kernels and file permission(read-only) problems in jupyterhub environment - Microsoft Q&A](https://learn-attachment.microsoft.com/api/attachments/98481-image.png?platform=QnA)

[Azure DSVM] GPU not usable in pre-installed python kernels and file permission(read-only) problems in jupyterhub environment - Microsoft Q&A

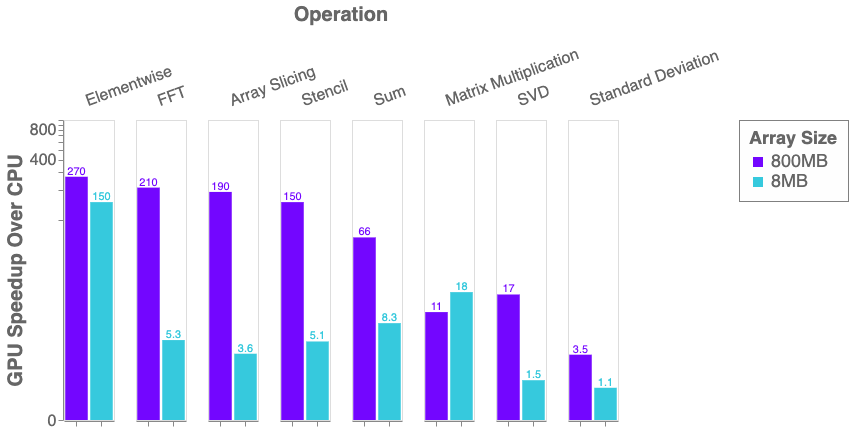

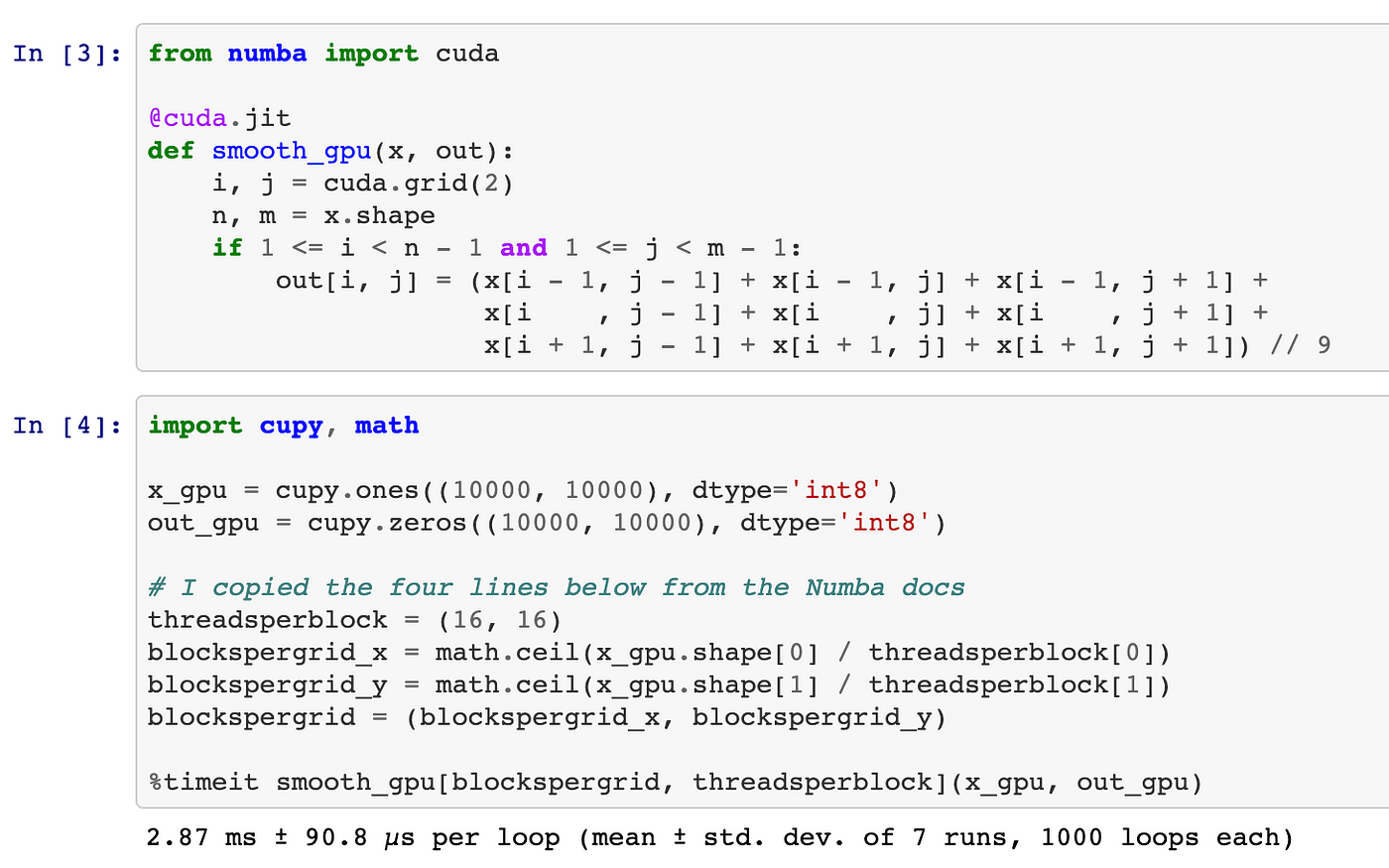

Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science

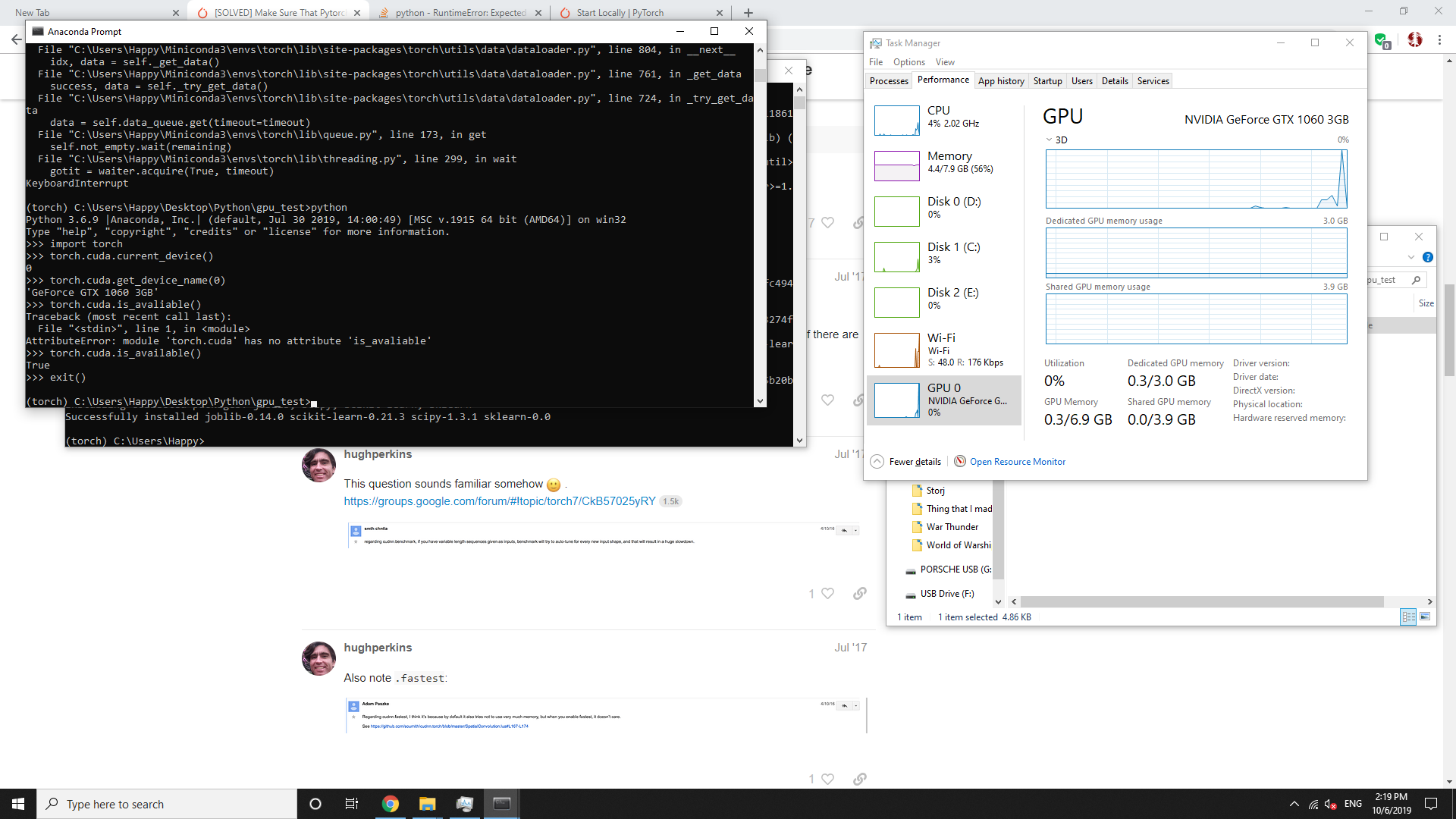

Executing a Python Script on GPU Using CUDA and Numba in Windows 10 | by Nickson Joram | Geek Culture | Medium

How to examine GPU resources with PyTorch | Configure a Jupyter notebook to use GPUs for AI/ML modeling | Red Hat Developer

A Complete Introduction to GPU Programming With Practical Examples in CUDA and Python | Cherry Servers